What's changed since AI began to reason: 13 concrete examples

Massimiliano Turazzini · Sep 3, 2025 · 12 min read

A year ago, we had models that seemed like miraculous machines but which, if you looked at them carefully, didn't really know how to reason. Something was missing.

They would respond immediately to our prompt, without pausing after we hit ENTER. They would quickly produce the result we (more or less) expected and even try to nail down some logic. But they tended to respond in a rush.

Today we find ourselves in a completely different landscape: the most advanced models no longer just generate text; they first spend time thinking, planning, and using tools.

Most of us first noticed this with GPT-5, but all of OpenAI’s o-series models, DeepSeek, Claude, Gemini, and many others have introduced the concept of reasoning before answering.

From stochastic parrots, as some call them, to stochastic parrots that think before speaking. And this has changed things a lot.

Critics will remind you that this isn't true reasoning, much less superintelligence. They're right, but we don't have a clearer term for it, any more than we have a clearer one than "intelligence."

If you look at this "combined intelligence" index, the change is quite clear: the reasoning models are highlighted in purple.

How did we get AI to think?

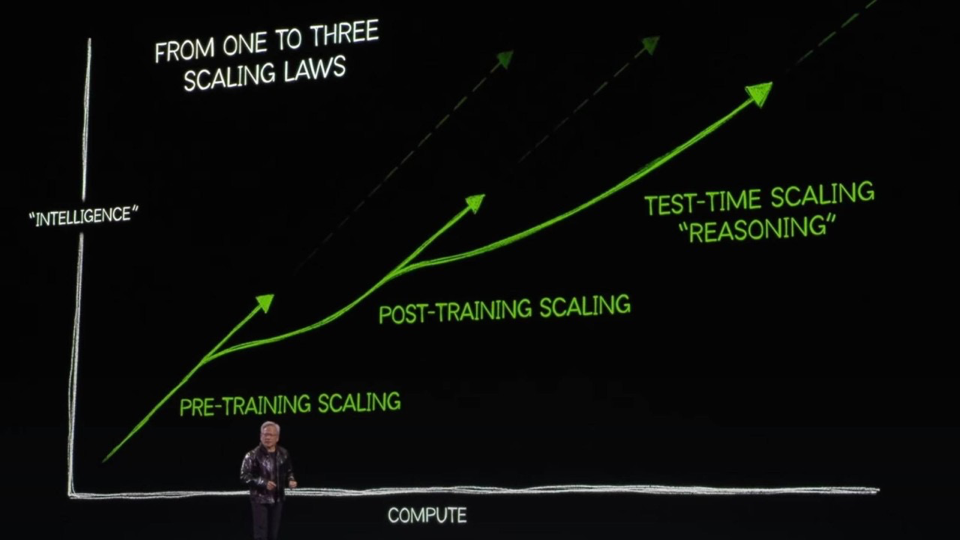

Initially, there was only one rule for generative models: more data and more computing power = more intelligence. This was the first scaling law.

Then it became clear that post-training techniques (wonderful acronyms like RL and DPO, which I will spare you here) could greatly improve the responses: the second scaling law, which among other things made DeepSeek famous.

And finally, test-time scaling, or 'reasoning': the time we give AI to think about how to respond to a request (which we pay for directly in tokens).

And it is precisely this last point, the time to reflect before responding, that has enabled many of the things I'm telling you about here.

This is the common thread connecting all the transformations of the last year.

What does it mean for AI to reason?

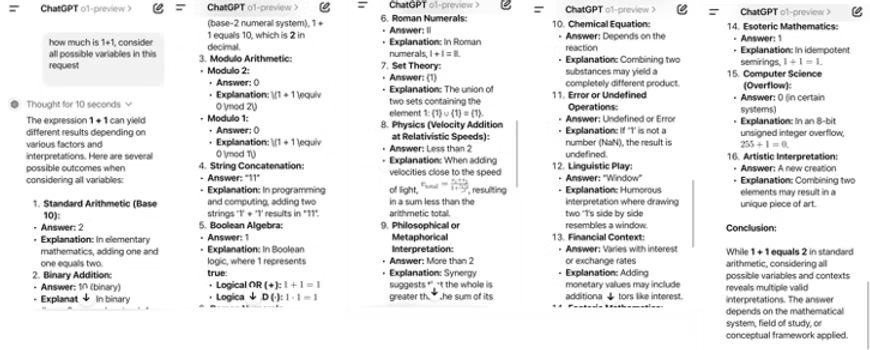

If you're happy with a quick answer: you've probably noticed that some models, especially GPT-5, take a moment to think before answering you.

In this example, the 'old' o1, OpenAI's first reasoning model, thought for ten seconds about what 1+1 is before answering us.

To delve deeper, I leave you with two posts I wrote a few months ago: one introducing o1, and another on how AI hit a wall and broke through it, precisely with reasoning.

But let's get to the point; here's what happened in just twelve months, with hindsight.

What has changed in a year?

1. LLMs had to be coaxed into reasoning by hand

To get a glimmer of logic, we relied on prompting techniques that simulated a chain of thought (the famous Chain-of-Thought), using improvised templates copied from some Insta-guru promising miracles. Copy and paste, hacks here and there, and off we went, hoping the machine would follow our lead. But in truth there was no reflection whatsoever: it was a "let's hope it figures it out" approach, at least for us "normal" users.

Today

Reasoning has become an integral part of AI. Not only that, it has become the metric. We have AI agents capable of winning math olympiads, tackling problems with real logic. We no longer evaluate them simply on how well they write, but on how well and for how long they can think.

And the real revolution is this: we no longer demand an immediate answer to the most difficult problems; we expect a thought. Many people find it more interesting to read AI's thoughts than the answer.

Slow AI has emerged: the kind worth asking well-crafted questions, because it responds after many minutes, sometimes hours. And its answer is often the one we were waiting for.

2. Using tools while the model was reasoning was unthinkable

A year ago, asking a model to use tools while reasoning was hard to imagine. Behind even a simple API call made by an LLM lay countless pitfalls that only a few experienced developers could navigate. The slightest mistake could bring everything crashing down.

Today

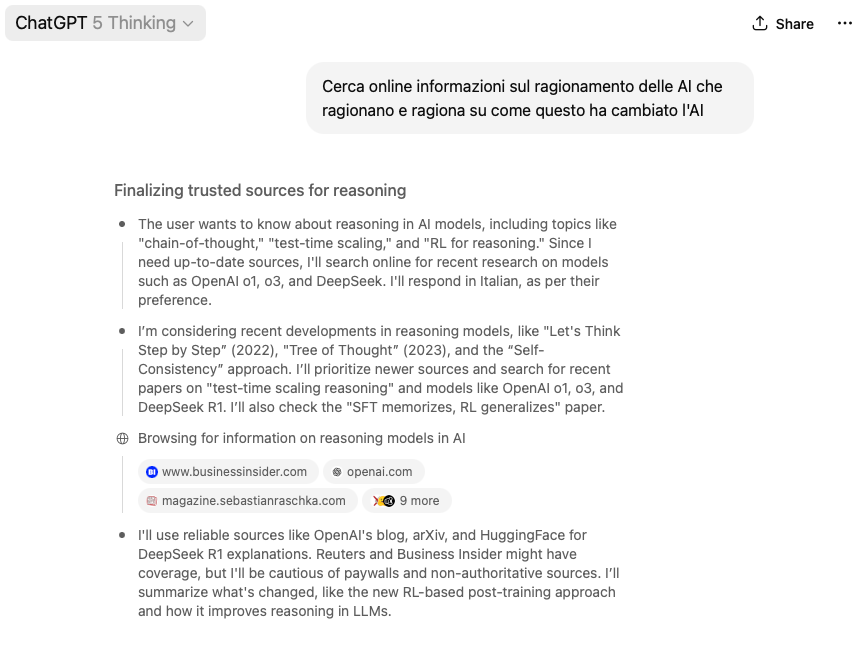

Today it's not just normal, it's the rule. An AI assistant like ChatGPT is now considered "agentic" by default, because it can interact autonomously with external tools even in the middle of its reasoning.

Web search, for example, is one such tool: it lets the model reason over fresh data.

Agentic platforms like Manus.im have shown us concretely what it means to integrate reasoning and external tools. The Deep Research features of Gemini, Claude, and ChatGPT work this way too. The model not only reasons but, in doing so, takes hold of existing software or any other digital tool and uses it to carry out our requests, rather than just giving us an answer. It's the practical integration of thought and action.
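To make "using tools while reasoning" a little more concrete, here is a minimal sketch of the loop that sits behind it: the model proposes a tool call, the runtime executes it, and the result is appended to the model's context before it continues. Everything here is a hypothetical illustration, not any vendor's API: `scripted_model` stands in for a real LLM, and the `ToolCall`/`FinalAnswer` types and tool names are assumptions made for the example.

```python
from __future__ import annotations

import ast
import operator
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class ToolCall:
    name: str       # which tool the (hypothetical) model wants to use
    argument: str   # a single string argument, to keep things simple


@dataclass
class FinalAnswer:
    text: str


Step = Union[ToolCall, FinalAnswer]

# Toy "calculator" tool: safely evaluates + - * / on numbers only.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression: str) -> str:
    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")
    return str(ev(ast.parse(expression, mode="eval")))


def fake_web_search(query: str) -> str:
    # Hypothetical placeholder for a real search API.
    return f"(no real search performed for: {query})"


def scripted_model(history: list[str]) -> Step:
    # Stand-in for an LLM: with no observation in context it asks for a tool;
    # once a tool result is available, it writes the final answer.
    observations = [h for h in history if h.startswith("observation: ")]
    if not observations:
        return ToolCall("calculator", "17 * 23")
    return FinalAnswer("The result is " + observations[-1][len("observation: "):])


def run_agent(question: str,
              model: Callable[[list[str]], Step],
              tools: dict[str, Callable[[str], str]],
              max_steps: int = 5) -> tuple[str, list[str]]:
    history = [f"question: {question}"]
    for _ in range(max_steps):
        step = model(history)
        if isinstance(step, FinalAnswer):
            return step.text, history
        # Run the requested tool and feed its output back into the context.
        result = tools[step.name](step.argument)
        history.append(f"call: {step.name}({step.argument})")
        history.append(f"observation: {result}")
    raise RuntimeError("no final answer within max_steps")


answer, trace = run_agent("What is 17 times 23?", scripted_model,
                          {"calculator": calculator, "web_search": fake_web_search})
print(answer)  # The result is 391
```

In a real system the `model` callable would be an LLM call and the `history` list its context window; the `max_steps` cap is one simple guard against an agent that never stops calling tools.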

3. Models could only be LARGE

A year ago, there was no talk of small models dedicated to reasoning. All the attention was on the giants: bigger, more powerful, more capable, more impressive.

The very idea that compact versions could reason was seen as a contradiction in terms; there could be no 'emergent abilities' at small sizes.

Today

We have Small, Mini, and Nano versions of each main model: 'distilled' models that inherit many of the reasoning capabilities of their bigger brothers and make them accessible, fast, and lightweight.

Small Reasoning Models instead of Large Language Models.

They've become incredibly useful in everyday workflows because they let you distribute reasoning everywhere, from the company server to the phone in your pocket, at significantly lower costs and much faster than larger models. Apple is building a long-term strategy around them; here's a model that runs inside a browser to recognize images.

It’s the democratization of reasoning made concrete, and you can find it in every AI assistant or on huggingface.co.

4. The idea of obscuring the reasoning seemed ridiculous

Who would have thought that a model's thinking could become a competitive advantage worth protecting? A year ago, it was unthinkable: the thought processes of early reasoning models were often public, transparent, and verbose. People prided themselves on the reasoning behind their models.

Today

We've entered the era of protected reasoning. OpenAI has closed its API to DeepSeek, Anthropic has cut off OpenAI's access to the Claude API over alleged violations of its terms of service, and OpenAI only displays summaries of its models' reasoning, keeping the details of their confabulations private.

The reason? To prevent anyone from reverse engineering the model and discovering its deepest secrets.

It is proof that the way an AI thinks has, for the first time, become a strategic asset.

You can see this in API responses that contain encrypted reasoning content you cannot read (but which, remember, you pay for).

Only open-source systems show their full reasoning. Sometimes even too much of it.

Try this prompt on different models, reasoning and non-reasoning, of different sizes, and see what happens:

“Write a beautiful composition about [a sweet affection between vegetables] in hendecasyllabic tercets with rhyming couplets (rime baciate) and an ABA BAB structure”

You'll soon discover that some models even suffer from overthinking, producing more reasoning than you have the time or inclination to read.

5. The geopolitics of AI was a topic for a select few

A year ago, few were seriously discussing geopolitics linked to artificial intelligence. The idea that one nation's models could be more adept at reasoning than others was clear only to a few insiders; it seemed like the stuff of academic conferences, far removed from real life.

Today

With the arrival of Chinese models like Qwen and DeepSeek, which in certain areas reason better than o1, GPT-5, Claude, and Grok while costing a fraction of the American models, a discussion has ignited on mainstream channels that has made anyone interested in AI realize that geopolitics is a central issue.

It's no longer just technology: it's geopolitics. The quality of model reasoning has entered the arena of international strategies. But I won't talk about it :).

6. No one was thinking about new agentic professions

Talking about jobs related to managing AI agents was seen as an exercise in imagination. "Agent shepherds," "memory curators," "reasoning supervisors": they sounded more like titles from a novel than corporate roles. There wasn't enough substance to them for anyone to take them seriously.

Today

The conversation has changed. The complexity of agents that reason, use tools, and have persistent memory has made it necessary to create new roles for people who understand how these agents reason and how to make them work at their best.

Understanding how an agent thinks, what it says to itself, what it says to its fellow agents, and what they in turn think is anything but simple.

So we're hearing more and more about context engineers who build environments for agents to operate in, supervisors who verify the quality of reasoning, and accountability managers who take responsibility for hybrid decisions. These are real roles, starting to appear in teams, as I wrote a few weeks ago.

7. Code was generated only if we explicitly asked for it

A year ago, no one seriously imagined that a model could not only reason, but also generate and execute code directly during that reasoning. It seemed preposterous, too close to science fiction to be taken seriously. Models were good at suggesting code snippets, sure, but they stopped there, and few dared to think beyond that.

Today

That barrier has fallen.

State-of-the-art (SOTA) models not only generate code as they reason, but can execute it in real time and immediately use the results to advance their thinking. Not only that, they do this multimodally, handling code, text, images, and calculations together.

They build the tools they need to produce the result.

It's like having an assistant who, while you explain your idea, opens a console, writes the code, tests it, fixes errors, and then immediately shows you the result. And for those who develop and use these systems, this is no longer science fiction: it's the new normal.

In the example below, I showed o3 a photo and asked it to identify risks and hazards; as you can see, it combined multimodal understanding with code generation and execution within the same reasoning.

8. Coding with AI was limited and chaotic

The use of models for programming was still artisanal: code snippets generated by prompts, often unusable without extensive review. The dream of an agent capable of autonomously developing complete applications seemed far away.

Today

Reasoning has changed the rules here too. Platforms like Lovable (the fastest-growing startup in Europe), Cursor, and Codex have exploded. We're no longer talking about an assistant suggesting lines of code, but about AI-native development environments where the agent reasons, structures the project, runs tests, and maintains context.

Vibe coding was also born: a new way of building software, even for those who don't know how to code, in which programming becomes conversation.

Today it is common, at least in certain circles, to talk about Agentic Engineering: an expert programmer working with an agent that can also think at length, even for hours, about complex software development problems.

9. Giving control of the browser to an agent was for the few

It was hard to even imagine an AI agent opening a browser, navigating links, editing files, or managing applications like a person at a computer. It was the stuff of science fiction at consumer AI conferences.

I did extensive research on this a little over a year ago, and we were still in the world of RPA (Robotic Process Automation, a technology that has been around for 20 years).

Today

Operator, AgentGPT, Claude, Perplexity's Comet, and a thousand other developer tools and emerging platforms have made it normal for an agent to control an entire digital environment with just a mouse and keyboard. They open, close, install, delete, configure. They no longer just ask "what to type," but "what to do." And they do it. With all the negative implications you can think of. A prime example is ChatGPT Agent.

10. Prompt injection was nerd stuff

Until recently, the problem of prompt injection was confined to technical forums and a few insiders. It seemed like a laboratory exercise: who would ever use those techniques in the real world?

Today

You all know what I'm talking about, right? Of course 🙂

Whenever we run deep research or let our AI assistant search the web or 'unsecured' documents, we must remember that we risk exposing sensitive contents of the agent's memory to attack.

Texts designed to manipulate the behavior of these naive but powerful agents can be found everywhere, aiming to exfiltrate information or perform unauthorized actions in your browser, on your PC, or on your server.

Not thinking about it is irresponsible. Cybersecurity and AI are now intertwined: one no longer exists without the other.

It's a real threat, and when I talk about it in workshops, I cause many sleepless nights.

11. Talking about recursive intelligence used to be the stuff of visionary nerds

It's simple, don't worry.

We figured out that with a normal AI assistant it works like this:

Ask the question → get an immediate answer.

The end. There's no further step. If you ask more questions, you'll get more answers.

Today

With recursive intelligence:

Make a request (Present product X to customer Y)

The assistant thinks and creates a plan divided into steps (1. I analyze the product, 2. I study the customer, 3. I adapt the contents)

It performs each step independently (or asks for confirmation, if told to)

It checks each time that everything is fine before delivering the final result to you.

It is no longer a dry answer, but an intelligent sequence of decisions, checks and improvements.
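The plan, execute, check cycle described above can be sketched in a few lines. This is a hypothetical illustration under simple assumptions: `make_plan`, `execute`, and `check` are stub functions standing in for model calls (a real agent would ask the LLM to draft the plan, perform each step, and judge the output), and the simulated failure on one step exists only so the retry path has something to do.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class StepResult:
    step: str
    output: str
    ok: bool


def make_plan(request: str) -> list[str]:
    # Hypothetical planner: a real agent would ask the model to break the request down.
    return ["analyze the product", "study the customer", "adapt the contents"]


def execute(step: str, attempt: int) -> str:
    # Hypothetical executor: simulate an incomplete first try at one step
    # so the check-and-retry logic is exercised.
    if step == "study the customer" and attempt == 0:
        return ""
    return f"done: {step}"


def check(output: str) -> bool:
    # Hypothetical verifier: here, any non-empty output counts as acceptable.
    return bool(output)


def run(request: str, max_retries: int = 2,
        confirm: Optional[Callable[[str], bool]] = None) -> list[StepResult]:
    results: list[StepResult] = []
    for step in make_plan(request):
        # Optionally ask the user before performing each step.
        if confirm is not None and not confirm(step):
            results.append(StepResult(step, "skipped by user", ok=False))
            continue
        output = ""
        for attempt in range(max_retries + 1):
            output = execute(step, attempt)
            if check(output):  # verify before moving on to the next step
                results.append(StepResult(step, output, ok=True))
                break
        else:
            # Every attempt failed the check: record it rather than hide it.
            results.append(StepResult(step, output, ok=False))
    return results


for r in run("Present product X to customer Y"):
    print(r.step, "->", r.output, "(ok)" if r.ok else "(needs attention)")
```

The `confirm` callback corresponds to "asks for confirmation if told to"; passing `None` lets the agent proceed on its own.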

A graphical example is here:

Agents think about how to think. They plan long-term, monitor their work, pick up broken threads, and learn from their mistakes. They are no longer automatons that execute: they are systems that reflect and correct themselves on the fly.

Below is an example of a task I gave a coding agent. You can see its thinking and how it completes the plan phase by phase.

✻ Thinking…

Perfect! Now I've completed the comprehensive documentation as requested. Let me mark Phase 3 as completed and move on to Phase 4 - testing the complete flow.

Update Todos

⎿ ☒ Phase 1: Investigate current implementation

☒ Phase 1: Document Job() function and features

☒ Phase 1: Map current job storage (saveJob, updateJob)

☒ Phase 2: Modify api/index.ts to use JobManager

☒ Phase 2: Update job results endpoint to use JobManager

☒ Phase 2: Remove old processAsyncJob() function

☒ Phase 2: Remove old utility functions (saveJob, getJob, updateJob)

☒ Phase 2: Connect JobManager to AnalysisProcessor properly

☒ Phase 3: Create comprehensive documentation (.md files)

☐ Phase 4: Test complete flow and raw response storage

☐ Phase 5: Cleanup unused code and polish

✻ Thinking…

Perfect! Phase 3 is now complete with comprehensive documentation created:

1. ✅ I did this and that

Now I need to start Phase 4: [...]

⏺ 🎉 Phase 3 Complete! Created what you asked

Now starting Phase 4: Testing the complete flow. Let me run the verification script to ensure everything works:

....

12. The word Agent had a different meaning

A year ago, talking about “AI agents” brought to mind confusing demos and laboratory experiments. Or Agent Smith from The Matrix.

LLMs didn't really think, so the idea that they could become autonomous entities, capable of making decisions or managing complex tasks, seemed out of reach. The most that could be achieved was a script that executed rigid, predetermined steps. No agency, just fragile automation. And reserved for a few insiders.

Today

The word agent still has a thousand meanings, depending on what you want to sell.

But AI agents are an important reality above all because the models have learned to reason.

It is reasoning that gives LLMs the ability to plan, to choose alternative paths, to use tools while they think.

Some people talk about 'Agentic Systems' because they combine reasoning and software tools.

Names aside, such autonomous agents would not exist without this cognitive basis.

They've stopped being "minimal task" chatbots and have become digital colleagues, with varying degrees of autonomy and agency, that require supervision. And as I also discussed in this article, these capabilities are the building blocks that will redefine the way we work.

13. It didn't matter how long an AI reasoned (in tokens and costs)

A year ago, no one seriously considered the token cost of reasoning. We used LLMs for quick prompts and immediate responses, and evaluated models solely on accuracy or consistency. The fact that in-depth reasoning could cost tens or hundreds of times more wasn't a real problem: it all played out in the lab, not the budget.

Today

It's become vital to understand how long an AI can reason. We think in terms of minutes, number of tokens, and real costs. So much so that people are even talking about a 'new Moore's Law'.

Furthermore, performance is no longer measured only in time, but also in the tokens needed to solve a problem.

In other words: for the same problem X and the same success rate, how many tokens and how much time do models A, B, and C need?

The same initial analysis, identical for every model, came at very different costs, because of the combination of cost per token and number of tokens per task needed to reach the same result.

And, from firsthand experience, I can assure you that this is an important topic!

In short, today we evaluate the cost of models based on the time they take, the number of tokens they use, and, of course, the price per token.
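The arithmetic behind this is simple: cost per task = tokens per task × price per token. The sketch below uses made-up prices and token counts for three hypothetical models A, B, and C, assumed to solve problem X with the same success rate; none of these figures are real measurements. It shows how a model that is cheaper per token can still be the most expensive per task.

```python
from dataclasses import dataclass


@dataclass
class ModelRun:
    name: str
    usd_per_million_tokens: float  # hypothetical price
    tokens_per_task: int           # hypothetical tokens needed to solve problem X
    seconds_per_task: float        # hypothetical wall-clock time

    def cost_per_task(self) -> float:
        # cost = number of tokens x price per token
        return self.tokens_per_task * self.usd_per_million_tokens / 1_000_000


# Made-up numbers for three models assumed to reach the same success rate.
runs = [
    ModelRun("A", usd_per_million_tokens=10.0, tokens_per_task=5_000, seconds_per_task=40),
    ModelRun("B", usd_per_million_tokens=2.0, tokens_per_task=60_000, seconds_per_task=300),
    ModelRun("C", usd_per_million_tokens=0.5, tokens_per_task=20_000, seconds_per_task=90),
]

# Rank models by what one solved task actually costs.
for r in sorted(runs, key=ModelRun.cost_per_task):
    print(f"{r.name}: ${r.cost_per_task():.3f}/task, "
          f"{r.tokens_per_task} tokens, {r.seconds_per_task:.0f} s")
```

With these invented figures, the ranking is C ($0.010), A ($0.050), B ($0.120): model B is five times cheaper per token than A, yet it costs 2.4 times more per task because it spends twelve times as many tokens.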

So what…

In twelve months, we've gone from an AI that seemed like a circus act, a bubble always on the verge of bursting because it was incapable of true reasoning, to models that today not only reason, but are concretely changing the way we work.

No, they're not perfect yet, and their reasoning sometimes looks more like clutching at straws than following a logical path.

No, GPT-5 didn't bring us the AGI promised by many. But honestly, that doesn't matter, because what we've gained is something more practical and closer: models that have begun to truly simulate thought, that are learning to use tools more effectively, that have transformed our relationship with generative AI.

A year ago, benchmarks were almost saturated. Today, we're seeing exponential growth in new tests that only thinking models can tackle.

A year ago, all this seemed incredibly difficult to achieve; today, it's routine. And it feels like 10 years have passed.

We are no longer talking about a simple text generator but about a new entity to which we can delegate part of the work and with which we can interact and reason (and yes, discuss) every day, in a structured and complex way.

So, instead of continuing to complain that GPT-5 doesn't live up to the expectations some had, perhaps it's time to enjoy this somewhat absurd but extraordinary present: an artificial intelligence that finally and truly thinks.

With all the extraordinary implications that this entails.

Let's think about it.

Max
